Continue on with your day.

Tuesday, December 6, 2011

Biostatistics Ryan Gosling

In celebration of my first biostatistics class, I will point you to the newest of the Ryan Gosling memes.

Monday, December 5, 2011

Generous, but a little dirty

“Jacques wanted to be logical, even purely logical, while he considered me as being mainly intuitive. Which would not have disturbed me if he had not injected into his remarks a bit of irony, even scorn. But it was not enough for him to be logical. Nature also had to be logical, to function according to strict rules. Having once found a ‘solution’ to some ‘problem,’ it had to stick to it from then on, to use it to the end. In every case. In every situation. In every living thing. In the last analysis, for Jacques, natural selection had sculpted each organism, each cell, each molecule down to the tiniest detail. To the point of attaining a perfection ultimately indistinguishable from what others recognized as the sign of divine will. Jacques ascribed Cartesianism and elegance to nature. Hence his taste for unique solutions. For my part, I did not find the world so strict and rational. What amazed me was neither its elegance nor its perfection, but rather its condition: that it was as it was and not otherwise. I saw nature as a rather good girl. Generous, but a little dirty. A bit muddle-headed. Working in a hit-or-miss fashion. Doing what she could with what was at hand. Hence, my tendency to foresee the most varied situations…”

Excerpted from The Statue Within, by François Jacob

Monday, November 21, 2011

What we're up against

E. coli

Everyone knows the last place you want to get stuck for a long time is a hospital. Hospitals are breeding grounds for bacteria. Disease-causing bacteria. Bacteria that are getting smarter. “Getting smarter” is a great euphemism for evolution. The same way we learn and adapt to new situations, bacterial pathogens adapt to their human hosts, develop ways to evade the host's immune response, and become more resistant to antibiotics (see MRSA). They do this simply by accumulating mutations in their genomes. The bacteria with beneficial mutations multiply; the ones without, well, those don’t do so well. That, my friends, is evolution.

Evolution is easy to see on this small scale because bacteria have relatively small genomes (compared with, say, mammals, though genome size isn’t necessarily indicative of organism complexity) and short generation times. Given the right conditions, E. coli (your general run-of-the-mill bacterium) can double in 20 minutes. I can’t think of anything that I can do in 20 minutes. To put it in perspective, while you’re busy watching an episode of 30 Rock on Netflix, Your Favorite Bacterium has completed a generation and is working on another. How’s that for making you feel lazy? Actually, it shouldn’t. That has no bearing on your personal TV choices. But because this bacterium is constantly churning out new generations, a random mutation that, say, lets one bug use oxygen more efficiently and divide twice as fast as the ones without it makes a big difference when you’re counting time in twenty-minute increments, at least to a bacterium whose sole purpose is to produce as many offspring as it possibly can. (Live long and prosper, etc.)
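To put some numbers on that, here's a toy Python sketch. It assumes idealized, unlimited exponential growth (no nutrient limits, no cell death), a 20-minute doubling time for ordinary E. coli, and a hypothetical mutant that doubles every 10 minutes, starting as a single cell among a million. The function names and starting numbers are made up for illustration.

```python
def generations(minutes: float, doubling_time: float = 20.0) -> float:
    """Number of doublings that fit into `minutes` at a fixed doubling time."""
    return minutes / doubling_time


def mutant_fraction(minutes: float, wild_type_start: int = 1_000_000,
                    mutant_start: int = 1, wt_doubling: float = 20.0,
                    mutant_doubling: float = 10.0) -> float:
    """Fraction of the population carrying the hypothetical faster-dividing mutation.

    Assumes pure exponential growth: N(t) = N0 * 2 ** (t / doubling_time).
    """
    wild_type = wild_type_start * 2 ** (minutes / wt_doubling)
    mutant = mutant_start * 2 ** (minutes / mutant_doubling)
    return mutant / (wild_type + mutant)


print(f"generations in one day: {generations(24 * 60):.0f}")
for hours in (0, 2, 4, 6, 8):
    print(f"{hours} h: mutant fraction = {mutant_fraction(hours * 60):.6f}")
```

Under those assumptions, a 24-hour day holds 72 generations, and the lone mutant goes from one in a million to the clear majority of the population in about eight hours. Real cultures run out of food long before that, but the direction of the effect is the point.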

The scary part is that while scientists and doctors are on the metaphorical first floor of disease etiology, bacterial pathogens are racing up the stairs, taking the elevator, and getting miles ahead of us faster than you can say “candidate gene.”

The cool thing is, we’re starting to catch up. In the most recent issue of Nature Genetics, Lieberman et al. used some pretty interesting techniques to take a comprehensive look at bacterial evolution. High-throughput sequencing has made it possible to actually trace the specific mutations driving the evolution of these bacterial pathogens.

Lieberman et al. looked at the genetic adaptation of a single bacterial strain in multiple human hosts during the spread of an epidemic: Burkholderia dolosa, a bacterial pathogen associated with cystic fibrosis. In the 1990s, 39 individuals with cystic fibrosis were infected. Bacteria were isolated from each of them and routinely frozen. The researchers then followed each patient over the course of 16 years, isolating samples of B. dolosa along the way.

What scientists can do with sequencing data

Scientists sequenced the whole genome of every bacterial isolate. They focused their analysis on SNPs (single nucleotide polymorphisms), since other genomic variations (structural variants and mobile elements) are a little harder to interpret. They found that mutations were accumulating at about 2 SNPs per year, which is consistent with bacterial mutation-fixation rates (the rate at which a mutation becomes “fixed,” or stable, within that genome). Because bacteria had been isolated at so many time points, the researchers documented enough genetic diversity within that single strain to work out the evolutionary relationships among the isolates. And because this bacterium is so infectious, the data also let them trace the path of infection from person to person. How cool is that? Unless you’re the person who started it all, and then, oops, you should have stayed home from work that day.
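To get a feel for what "2 SNPs per year" buys you, here's a minimal Python sketch that counts SNP differences between aligned isolates and turns each count into a rough time estimate. It assumes a strict molecular clock of 2 SNPs per year per lineage, isolates sampled at the same time, and perfectly aligned sequences. The toy isolates are hypothetical, and this is far simpler than the phylogenetic methods a real study would use.

```python
from itertools import combinations


def snp_distance(seq_a: str, seq_b: str) -> int:
    """Count positions where two aligned, equal-length sequences differ."""
    if len(seq_a) != len(seq_b):
        raise ValueError("sequences must be aligned to the same length")
    return sum(1 for a, b in zip(seq_a, seq_b) if a != b)


def years_since_split(snps: int, rate_per_year: float = 2.0) -> float:
    """Rough molecular-clock estimate of time since two isolates diverged.

    Both lineages keep mutating after they split, so the SNP distance grows
    at twice the per-lineage rate.
    """
    return snps / (2 * rate_per_year)


# Hypothetical toy isolates; real B. dolosa genomes are millions of bases long.
isolates = {
    "isolate_1": "ACGTACGTACGTACGTACGT",
    "isolate_2": "ACGTACCTACGTACGAACGT",
    "isolate_3": "ACGAACGTACGTTCGTACGG",
}

for (name_a, seq_a), (name_b, seq_b) in combinations(isolates.items(), 2):
    d = snp_distance(seq_a, seq_b)
    print(f"{name_a} vs {name_b}: {d} SNPs, ~{years_since_split(d):.2f} years apart")
```

A matrix of pairwise distances like this one is the raw material for building a tree of who is most closely related to whom, which is how an infection path can be inferred.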

They were also able to look at evolution at the gene level. They looked at two specific phenotypes: resistance to ciprofloxacin (which is frequently prescribed to individuals with cystic fibrosis), and the presentation of O-antigen repeats in the lipopolysaccharide of the bacterial outer membrane, which plays a key role in virulence.

What they found was that for each of these phenotypes a single gene was implicated. Using the phylogenetic data, they were also able to determine that the multiple mutations present in an individual had been acquired independently of each other.

Here’s where statistics comes in. Since evolution runs on random mutations, to demonstrate that a selective pressure is at work you need to show that what you found happened more often than you’d expect by chance. They found 561 independent mutational events in 304 genes. Under a neutral evolution model (everything happening by chance, no selective pressure), you’d expect these mutations to be randomly distributed among all 5,014 B. dolosa genes, and it would be rare for a gene to acquire more than a single mutation. Instead, a lot of genes contained multiple mutations; four in particular had more than ten.
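Here's a back-of-the-envelope version of that neutral expectation in Python. It is not the test Lieberman et al. actually ran. It assumes every gene is equally likely to be hit (ignoring gene length, which a real analysis has to account for) and uses a Poisson approximation with an average of 561/5,014 mutations per gene.

```python
import math


def poisson_tail(k: int, lam: float, extra_terms: int = 60) -> float:
    """P(X >= k) for X ~ Poisson(lam), summed directly over the upper tail.

    Summing the tail (instead of computing 1 - CDF) avoids floating-point
    cancellation when the probability is tiny. Terms are computed in log
    space with lgamma so large factorials never overflow.
    """
    return sum(
        math.exp(i * math.log(lam) - lam - math.lgamma(i + 1))
        for i in range(k, k + extra_terms)
    )


def expected_genes_with_at_least(k: int, mutations: int = 561,
                                 genes: int = 5014) -> float:
    """Expected number of genes hit >= k times if mutations land uniformly."""
    lam = mutations / genes  # average mutations per gene under neutrality
    return genes * poisson_tail(k, lam)


for k in (1, 2, 3, 11):
    print(f"genes with >= {k} mutations expected: "
          f"{expected_genes_with_at_least(k):.3g}")
```

Under those assumptions, the 561 mutations would be spread across roughly 530 different genes (rather than the 304 actually observed). You'd expect roughly 29 genes with two or more mutations, about one gene with three or more, and essentially zero with eleven or more. That's why four genes with more than ten mutations is such a strong hint that selection is at work.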

There are, of course, sites of mutational bias. In humans, these include genetic loci such as olfactory receptor genes and genes contributing to the immune system: genes that just happen to acquire more mutations in response to the environment. In the end, the researchers identified 17 mutated genes that were not mutational hotspots but had undergone adaptive evolution under the pressure of natural selection.

What we can learn from this

High-throughput sequencing and computational analysis are finally giving us the tools to delve into the world of ever-evolving disease-causing bacteria. In a clinical setting this could have a powerful impact: tailoring antibiotics to a particular bacterial genotype would be a more efficient way of fighting disease. Not to mention that we’re now able to watch evolution happen in real time. How's that for catching up?

This post has been submitted for the NESCent 2011 ScienceOnline Blog Travel Award Contest.

Monday, November 14, 2011

Useful, Time-waster, or Useful time-waster?

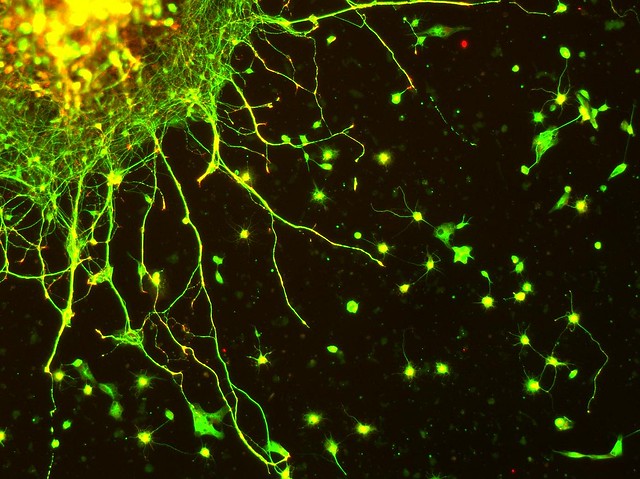

Edinger-Westphal neurons in culture, stained for tubulin (green) and synapsin (red). Image via Flickr.

BioNumbers' tagline is "The database of useful biological numbers." It's for when you just need a number and end up poring through textbooks or endless articles to find, say, the radius of an ATP molecule (~0.7 nm), the mean excitatory postsynaptic current (EPSC) amplitude of C. elegans (38 +/- 2.1 Hz; gosh, I always forget that one), or just the overall size of the human genome (~3.08e9 bp). Oh, and an adult male brain has an average of 8.61e10 +/- 8.12e9 neurons. Which makes perfect sense.

You search for something, it pops up in a handy table, and it even comes with a citation, so you don't have to cite BioNumbers in whatever homework assignment or paper you're doing.

It looks like fun. I just spent a lot of time in the Amazing BioNumbers section and found out that the characteristic heart rate of a pond mussel is 4-6 beats per minute.

I would classify that piece of knowledge as could-be-conceivably-useful-in-conversation-time-waster.

Those systems biologists. What will they come up with next?

Friday, November 11, 2011

How To Read a Really Complicated Paper

Because I am a glutton for punishment/really know how to party, I chose a really complicated/awesome paper to present for journal club this coming week.

Some of my criteria for choosing a Really Complicated Paper are:

- An unpronounceable protein in the title (Serine Palmitoyltransferase, anyone?)

- Lots of equations

- Lots of figures

- Microsoft Word only recognizes half of the words in the title as actual words.

MicroRNA-137/181c Regulates Serine Palmitoyltransferase and In Turn Amyloid β, Novel Targets in Sporadic Alzheimer’s Disease

PMID: 21994399

So here we go. My tips and tricks for reading a paper.

- Get comfortable. We’re going to be here for a while. I include coffee in this step.

- Be an active reader. I used to silently make fun of my freshman-year roommate when she would line up all of her differently colored highlighters before doing a reading for class. Little did I know that nothing makes you unretain information more than mindlessly skimming through a paper. Who would have thought! When I’m reading a paper, I’m armed with a highlighter for important words/phrases/concepts and a pen for notes to myself.

- It’s all about you. Remember that pen from step two? Some of your notes might be “look this up” or “wtf,” but reserve some space for applying what you’re reading to your own research/interests. See a method that you might use four years from now if all your experiments go perfectly to plan? Star that one. Applying things from the paper you’re reading to what you’re doing is a great way to retain knowledge. There has got to be a study about that; it must reinforce some short-term/long-term memory pathway loop something that just helps you remember it. Plus, then you’ve learned something applicable to what you do. Bam.

- Prioritize. I tend to read Abstract --> Introduction --> Discussion --> Results --> Methods. Papers aren't novels; there's no right or wrong way to read them.

- Do a presentation. Volunteer to present a paper that you're reading for a journal club. Making and practicing a presentation will help you understand the paper. As you pull out figures and rethink your own captions and what you're going to say, you'll "connect" with your paper all the more. You'll also get some feedback and insight from other members of your lab/journal club.

- PDF vs. Full Text. I like printing papers out and taking them with me to get coffee, sometimes taking them to lunch (I'm such a great date!), and reading and re-reading and highlighting and writing. I print out the .pdfs to do this. It's important to remember that .pdfs are destined for the print version of a journal, so figures and tables aren't always (or, almost never) in a comprehensible order. If this is messing you up, try going to the full text version online. It's not as pretty as a .pdf, but the figures are right there in text, so when the results refer to a table or a figure, that table or figure is usually directly below it, in a handy window that you can pop out.

And finally,

- Try not to procrastinate. It's hard getting through a paper that's really dense. You just have to sit down and do it. I'm really bad at this. For instance, instead of reading my paper and working on my journal club presentation, I wrote this entry.

Back to the paper!

Any other tips that you have that I missed? What are your secrets to reading a paper?

Friday, September 9, 2011

Preparing for a meeting

I'm going to my First Ever Scientific Meeting tomorrow, in DC. I am beyond excited and just an itty bitty bit nervous, but I have received the best advice ever before going. I figured I'd share it now, and then recap what worked best when I get back.

Dress code:

Business casual, but don't look like a lawyer.

It's better to be overdressed than underdressed, unless you have Nobel Prize-winning data. Then you can wear whatever you want.

This one is my favorite: Dress as seriously as you wish to be perceived. Which I think is exceptionally helpful for those of us that have round faces and tend to look a lot younger than we really are.

For the poster:

Put yourself out there. As people walk by, stop them and say, "Can I tell you about my poster?" Otherwise, no one will stop. And if you ask, they'll be guilted into saying yes.

This goes along with putting yourself out there: be engaging. Eye contact and all that. Introduce yourself within the first sentence.

Don't undermine your data/shoot yourself in the foot. Play up that negative result!

Anything I'm missing? I'm all going-to-my-first-meeting jittery. I'm super excited. Hopefully I'll come back with good stories. And no tears.

Thursday, August 18, 2011

Poster woes

About six months ago, our PI sent around an email alerting us to a meeting and saying that anyone who wanted to go could submit a poster and go.

Apparently six months ago I was bright-eyed and bushy-tailed and excited about an international meeting (for, uh, people not in the US), and science!, and hotels! And I was working on a project that I thought would make a good poster, so I wrote up an abstract, ran it by the boss, and submitted it. Lo and behold, it was accepted! And I was very excited until someone kindly told me that pretty much everyone gets posters accepted, because, well, you can just put up a poster anywhere. OK. So, a little bit of a letdown, because it's like getting the "Most Improved" award on a sports team: you just know that everyone gets an award because they can't just not give you one, but clearly you were not the most valuable or had the most headers. (Note to children's sports coaches: to an overly sensitive 9-year-old, getting "Most Improved" is pretty much the Worst Thing Ever.)

Well, anyway, back to the saga of my poster. The problem was that when I wrote my abstract and submitted my poster six months ago, I figured that within six months I would get the beautiful results I hypothesized, make a brilliant poster, get discovered, and receive an honorary PhD and a book deal. (I think big, OK?) And of course, this does not happen. Not only do I not have great results, I'm also lacking actual data. There were some quality issues and, long story short, well, there isn't really a great system for detecting copy number variations between monozygotic twins.

The meeting is in three weeks, and my PI wants to see my poster by the end of next week to have time to look and critique and change. Oh perfect! That means I have a week! I'll start today!

And this is what I have so far.

Too bad you can't actually put crickets chirping on a poster, because that is what my PowerPoint slide is doing at me right now. It's chirping.

So what am I supposed to do? Pictures? I'm thinking of bringing some of my daughter's stickers from home and maybe decorating it some. You know, to draw their eyes away from the big empty space that is, well, the entire poster. Maybe some sort of Post-it note collage? Anyone have any ideas for when, you know, you have to distract people from the fact that you don't have any results?

Tuesday, June 28, 2011

The Inevitable

I took a break from the lab last week and headed up with my boyfriend to his brother's wedding at their family farm in Virginia. It was beautiful, right on the edge of Shenandoah National Park, and a welcome break from the heat and the monotony of work.

Which brings me to the inevitable. Meeting new people, and answering the question, "so what do you do?"

Around scientists this is easy. I may feel slight embarrassment when explaining what I do to molecular biologists, because there is sometimes a slight stigma attached to "discovery science" (there shouldn't be, but that's a topic for another day). I work in human genetics. It's much harder, if not impossible, to design an elegant experiment to test a hypothesis when what you're interested in is humans.

But what do you say around people with no science background at all? It's especially hard because my boyfriend is one of those do-gooder types. If we're standing next to each other and someone asks what he does, he says, "I'm starting my master's in social work, and I'm specifically interested in resettling African refugees." To which you just watch people swoon. Everyone knows what social work is, and everyone knows it's such a noble profession where you tend to be overworked and underpaid, so this makes A (the boyfriend) just the bee's knees at social events. And then they look over at me, and I'm all, "human genetics, yo," and a few things happen.

For one, the conversation tends to come to a complete stop. It's actually a great talent of mine, to bring happy light-hearted conversations to a screeching halt. I'm kind of awkward. This happens for one of two reasons. One, people just don't understand or relate. Even if people can't relate to social work, they can understand what a social worker does. You help people. That's awesome. What does a scientist do? Crickets. The second thing that happens, which I'm wildly trying to prevent, is people are intimidated. You do science?? You must be smart. At this point I just want to pull out my GPA and be like, "See? See that GPA barely floating above a 3?? And that's really only because I padded it with my creative writing classes!" On the vast spectrum of biology majors, I'm definitely on the lower end.

I tend to lean towards the more awkward side of social situations. I'm kind of quiet around new people, and I get slight social anxiety in big groups. Luckily, because I am (sort of, pretending to be) a scientist, I adapt very quickly to new situations. Here are some tips that I use to deal:

- Alcohol helps. Alcohol helps all social situations. After a beer or two, it's way easier to introduce yourself and unstressfully describe what you do.

- Make it understandable. This is easier in the human genetics field. I now open with the disease I work on, even though in reality it's secondary to the genetics and the biology I do. I'll usually tack on "genetics," but sometimes I'll skip straight to saying, "I'm studying autism." If you work on chromosome segregation in yeast (Hi Dad!) (and that's even the dumbed-down version), and there's no prayer of getting someone to understand what you do, go straight to the disease. "Understanding the mechanisms that cause cancer" works. Cancer! Down syndrome! Etc.

- Make it relatable. Sometimes people just honestly want to know what you do every day. A lot of people's science experience stops at high school, and some people have vague recollections of using pipets or microscopes. If you take pictures of things on microscopes, say that. I've been differentiating neurons from neuronal stem cells lately, and sometimes I'll say, "I'm growing neurons." That's cool. I tend to not mention stem cells, especially at weddings or if I don't know the people very well, because sometimes that gets you to a political discussion. Unless you're into that, of course, then by all means, go ahead.

- Just relax and be yourself. I love what I do. It's why I'm doing it. And if you just forget that you might come off as intimidating or weird or nerdy for a minute, and you just try to convey that you love science, and the creativity, the flexibility, the constant discovery, people will be able to relate to that. Sometimes I just say that my job is awesome because it's so conducive to having a kid. I can make my own hours, and be ready for sick days and teacher work days. My lab isn't too competitive, and everyone has a wide variety of interests. And I love it here. And remembering that you love what you do, well, that's the greatest confidence boost you can get.

So, young padawan, in conclusion, go boldly into non-scientific social situations. And when in doubt, remember, you're curing cancer.

Monday, June 20, 2011

Monday morning

So I get this email from my postdoc at 9:30 on Monday morning.

Hey there, I'm working from home today, but I'm going to be talking to [The Big Boss Man aka the PI] so could you please send me some info on what has been accomplished on your end this week....

It's Monday morning! At 9:30! The only thing I have accomplished so far has been turning on my computer, discovering that Chrome was running a little slow, and clearing out my cache.

It's Monday people, let's all act accordingly.

Tuesday, June 14, 2011

A brief overview of sequencing technologies

I started writing a post earlier today about some cool collaborative genetics projects going on around the world, and then I realized that these projects all rely on a common knowledge of sequencing. In an effort not to sound completely like a Wikipedia article on sequencing, I’m going to focus on the methods I use in the lab on a fairly regular basis; next-gen sequencing is sort of a “you seen one, you seen ‘em all” type of technology anyway. So I’m going to go through them in chronological order: Sanger sequencing (the method behind classic shotgun sequencing), Next Generation/High Throughput Sequencing (with the emphasis on Illumina’s method), and then touch briefly on Next-next-gen sequencing (I think they’re calling it third generation now). Then I’ll take a brief look at how we use sequencing in the lab. Ironically, we now all use High Throughput Sequencing as our first pass, but everything called by HTS, and I mean everything, gets verified through Sanger sequencing, the oldie but goodie.

Sanger sequencing

Sanger sequencing, also called chain-termination sequencing, gets its name from the fact that it was developed by some dude named Sanger (Frederick Sanger, to be exact). It’s also the method behind shotgun sequencing, which I’ll get to in a minute. Here’s how it works. You amplify the sequence you want, whether by PCR or in a vector in vivo. Your sequencing reaction then contains your purified target sequence, a primer for that sequence, DNA polymerase, regular ol’ dNTPs, and fluorescently labeled dideoxynucleotides (ddNTPs), which also happen to be chain terminating: once one is added, no more nucleotides can be added after it. Your sequence stops wherever a fluorescently labeled nucleotide is added. See where I’m going with this yet? So you put all that stuff in a tube and start a PCR reaction cycling (I know, I know, polymerase chain reaction reaction, but it’s like ATM machine; sometimes you just need to say the machine even though it’s included in the acronym), and polymerase works its magic, attaching dNTPs and labeled ddNTPs onto the exposed single strand of your target DNA sequence. Except that every time a labeled ddNTP is added, the DNA polymerase stops adding nucleotides, so that fragment ends right there. After a number of cycles, you’ll have a whole bunch of fragments of your sequence, each with one labeled nucleotide as its last base, like thus:

Your labeled fragments are then sorted by size using electrophoresis in a capillary tube, and the colors are read off one by one. The data you get back looks like this:

So there you have it. Sanger sequencing. Let’s recap.

Pros: For small sequences? It’s cheap and fast. I can throw my product in a microcentrifuge tube with some primers, walk it down to our sequencing facility, and for 8 bucks, they’ll give me really high quality sequence of about 800 base pairs or so.

Cons: You need to know your target sequence (or at least enough of it to amplify and sequence), which means you need to already know the genomic location, and you need to design primers flanking it.

Where it’s been: People got whole-genome data from these little dudes. That’s where the shotgunning comes in. You generate massive amounts of these short fragments, and then some crazy algorithm + a supercomputer + someone smarter than me align the fragments by where they overlap and construct a whole genome sequence from that. No one does that anymore.
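The overlap-and-stitch idea behind shotgun assembly can be sketched as a toy greedy assembler in Python. This assumes error-free reads and a genome with no repeats, which real assemblers can't count on. The reads are hypothetical, and the sketch is meant to show the idea, not how production assemblers work.

```python
def overlap(a: str, b: str, min_len: int = 3) -> int:
    """Length of the longest suffix of `a` that equals a prefix of `b`."""
    for length in range(min(len(a), len(b)), min_len - 1, -1):
        if a.endswith(b[:length]):
            return length
    return 0


def greedy_assemble(reads: list[str], min_len: int = 3) -> list[str]:
    """Greedily merge the pair of fragments with the longest overlap.

    Returns the contigs left when no overlaps >= min_len remain
    (ideally a single contig spanning the whole sequence).
    """
    # Drop reads that are fully contained inside another read.
    contigs = [r for r in reads if not any(r != o and r in o for o in reads)]
    while len(contigs) > 1:
        best_len, best_i, best_j = 0, -1, -1
        for i, a in enumerate(contigs):
            for j, b in enumerate(contigs):
                if i != j:
                    olen = overlap(a, b, min_len)
                    if olen > best_len:
                        best_len, best_i, best_j = olen, i, j
        if best_len == 0:
            break  # remaining contigs don't overlap; stop here
        merged = contigs[best_i] + contigs[best_j][best_len:]
        contigs = [c for k, c in enumerate(contigs) if k not in (best_i, best_j)]
        contigs.append(merged)
    return contigs


# Hypothetical overlapping reads taken from the toy sequence ATTAGACCTGCCGGAATAC.
reads = ["CCTGCCGGAA", "ATTAGACCTG", "GCCGGAATAC", "AGACCTGCCG"]
print(greedy_assemble(reads))
```

Greedy merging is the simplest possible strategy. Repeats longer than a read are exactly where it goes wrong, which is part of why assembling real genomes took supercomputers and very smart people.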

Where it is now: People use Sanger sequencing for much smaller applications now. We use it to verify PCR fragments and vector sequences. We’ll also use it to verify some of the sequence calls we get from high-throughput DNA sequencing methods.
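To make the chain-termination trick concrete, here's a toy Python simulation. It runs many copies of the reaction, each stopping at a random position when a dye-labeled terminator gets added, then uses a fake "capillary" step that sorts fragments by size and reads the terminal dye at each size. It models the newly synthesized strand directly (which is the complement of your template) and uses the base letter as a stand-in for dye color. The 10% termination probability and the target sequence are made-up numbers.

```python
import random
from collections import Counter


def sequencing_reaction(new_strand: str, n_molecules: int,
                        p_terminate: float, rng: random.Random) -> list[str]:
    """Simulate chain termination for many copies of the template.

    At each position the polymerase adds either a regular dNTP (and keeps
    going) or, with probability p_terminate, a dye-labeled ddNTP (and stops).
    Each terminated fragment is returned; its last base carries the dye.
    Molecules that never terminate carry no dye and are not returned.
    """
    fragments = []
    for _ in range(n_molecules):
        for i in range(len(new_strand)):
            if rng.random() < p_terminate:
                fragments.append(new_strand[: i + 1])
                break
    return fragments


def read_by_size(fragments: list[str], read_length: int) -> str:
    """Mimic capillary electrophoresis: order fragments by size and report
    the dye (here, simply the terminal base) seen at each size."""
    dyes_at_size = {size: Counter() for size in range(1, read_length + 1)}
    for fragment in fragments:
        dyes_at_size[len(fragment)][fragment[-1]] += 1
    return "".join(
        counts.most_common(1)[0][0] if counts else "N"  # N = no signal at this size
        for _, counts in sorted(dyes_at_size.items())
    )


rng = random.Random(42)
target = "GATTACAGATTACACCGGTA"  # hypothetical newly synthesized strand
fragments = sequencing_reaction(target, n_molecules=5000, p_terminate=0.1, rng=rng)
print(read_by_size(fragments, len(target)))
```

Because every possible length shows up among thousands of fragments, reading the dyes in size order spells out the sequence. Any size with no fragments would come back as N.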

Next Generation Sequencing (NGS)

Next Generation Sequencing has a couple of aliases. Some people call it High Throughput Sequencing, others call it, well, I spoke too soon. I think people just call it next-gen or high-throughput. There are a bunch of platforms for it, which is great. Next-gen sequencing is the textbook definition of how capitalism works: competition to come up with the best product for the lowest price is driving the development of some genius technologies, at prices that let more and more people use sequencing as an integral part of their research. That being said, I’m really only going to talk about Illumina’s platform, because it’s what my lab uses and it’s the one I’m most familiar with. The actual technologies differ a bit, but the concept of high throughput/efficiency is the same for all.

There are two big steps in NGS. I like to think of them as “at the bench” and “at the really scary expensive sequencing machine,” but I’m not requiring that you use my terminology. At the bench is preparing the genomic library, and at the really scary expensive sequencing machine is cluster generation and the actual sequencing.

At the bench

Preparing the genomic library: You start out with your genomic DNA, shear it into more manageable sizes (~300 bp), and ligate Illumina’s sequencing adapters onto the fragments. This is your first step, and these adapters will be necessary for the sequencing steps. You then amplify the fragments using primers that match the adapter sequences you ligated on, which ensures that only fragments with adapters on both ends get amplified.
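Here's a toy Python sketch of the library prep logic. It shears a genome into ~300 bp pieces, lets each end pick up an adapter with some probability, and keeps only what the adapter-matching primers could amplify. The adapter strings are obviously fake placeholders (not Illumina's actual sequences), and the 80% ligation efficiency and random genome are hypothetical.

```python
import random

# Hypothetical placeholder adapters; NOT Illumina's real adapter sequences.
ADAPTER_LEFT = "[ADAPTER_L]"
ADAPTER_RIGHT = "[ADAPTER_R]"


def shear(genome: str, mean_size: int, rng: random.Random) -> list[str]:
    """Break a genome into consecutive fragments of roughly mean_size bases."""
    fragments, start = [], 0
    while start < len(genome):
        size = max(1, round(rng.gauss(mean_size, mean_size * 0.1)))
        fragments.append(genome[start:start + size])
        start += size
    return fragments


def ligate(fragment: str, efficiency: float, rng: random.Random) -> str:
    """Each end independently picks up an adapter with probability `efficiency`."""
    left = ADAPTER_LEFT if rng.random() < efficiency else ""
    right = ADAPTER_RIGHT if rng.random() < efficiency else ""
    return left + fragment + right


def amplifiable(molecule: str) -> bool:
    """Primers match the adapters, so only molecules with BOTH ends ligated amplify."""
    return molecule.startswith(ADAPTER_LEFT) and molecule.endswith(ADAPTER_RIGHT)


rng = random.Random(1)
genome = "".join(rng.choice("ACGT") for _ in range(30_000))  # toy random genome
fragments = shear(genome, mean_size=300, rng=rng)
ligated = [ligate(f, efficiency=0.8, rng=rng) for f in fragments]
library = [m for m in ligated if amplifiable(m)]
print(f"{len(fragments)} fragments, {len(library)} carry adapters on both ends")
```

The amplification step acts as a filter: half-ligated or unligated fragments are still in the tube, but they never get copied, so the library ends up dominated by properly prepped molecules.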

Illumina Sequencing

The Machine: Your prepared genomic library is placed on a flow cell. A flow cell has eight lanes, and in general, one sample is run per lane.

Cluster Generation: In this part, your single-stranded fragments randomly attach to the inside surface of the flow cell channels. Remember those adapters you ligated on to prep your library? Oligos that match those adapters are bound to the surface of the flow cell, and that’s what your fragments stick to.

Unlabeled nucleotides are then added, as well as an enzyme that initiates solid-phase bridge amplification. This just means that the free-standing end of each fragment bends over and finds the other primer on the surface, forming a bridge.

The enzyme then also turns each single-stranded bridge into a double-stranded bridge. The double-stranded molecules are then denatured, leaving only single-stranded templates attached to the flow cell (but because you made them double stranded before denaturing, you now have both complementary strands). You repeat this over and over, so each original fragment grows into a dense cluster of identical copies, and you end up with several million clusters on the flow cell.
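A toy model of how one cluster grows: each cycle, every strand in the cluster gets copied with some probability, but the cluster can’t grow forever because it runs out of room (and nearby surface primers) on the flow cell. The efficiency and the cap of 1,000 strands are assumptions picked for illustration, not Illumina specs.

```python
import random


def grow_cluster(cycles: int, efficiency: float = 0.9, capacity: int = 1000, seed: int = 0) -> int:
    """Toy bridge-amplification model: returns the number of strands in one cluster.

    Each cycle, every existing strand is copied with probability `efficiency`,
    and the cluster is capped at `capacity` strands. Both parameters are
    illustrative assumptions, not instrument specifications.
    """
    rng = random.Random(seed)
    copies = 1  # one original fragment hybridized to the flow cell
    for _ in range(cycles):
        new = sum(1 for _ in range(copies) if rng.random() < efficiency)
        copies = min(capacity, copies + new)
    return copies
```

With efficiency=1.0 the cluster doubles every cycle (32 strands after 5 cycles) and hits the 1,000-strand cap at cycle 10; with the default 0.9 it grows a little slower and then plateaus at the same cap.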

At the end, the strands attached through one of the two adapters are cleaved off and washed away, so every molecule left in a cluster points in the same direction.

The Actual Sequencing

Primers are added plus all four labeled dNTPs, which, again, are made so that only one base can be added per cycle. After the primers, dNTPs, and DNA polymerase are added to the flow cell, a laser is used to excite the fluorescence, and an image of the emitted fluorescence is captured.

Then you literally rinse and repeat. Rinse to remove all the leftover dNTPs that weren’t incorporated last time, then remove the terminator (and the fluorescent label) from the bases that were incorporated so the strand can be extended further, add more dNTPs, and take another picture.

The clusters stay in the same place from picture to picture, but each one can light up in a new color, because each cycle adds the next base.

Cycles continue until you have reads 76 bases long. Computers analyze the image data to turn each cluster’s series of colors into actual sequence. The cool thing is that they use astronomy imaging techniques to monitor the same spots over time. After you analyze all your millions of clusters to get your reads, you have real whole genome sequence!

Again, this is only sequence. You don’t know where in the genome each read came from, only that it existed in your original sample. People use a variety of programs to align reads to a reference genome. I’ll go a little into aligning after I briefly touch on a variation on this theme.
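Here’s about the simplest aligner possible: index every k-mer in the reference, use a read’s first k bases to find candidate positions, and check the whole read at each one. It only handles exact matches; real short-read aligners (BWA and Bowtie, for example) use a Burrows-Wheeler-based index and tolerate mismatches and small indels. The function names and the seed length k are illustrative.

```python
from collections import defaultdict


def build_index(reference: str, k: int) -> dict[str, list[int]]:
    """Map every k-mer in the reference to the positions where it starts."""
    index: dict[str, list[int]] = defaultdict(list)
    for i in range(len(reference) - k + 1):
        index[reference[i:i + k]].append(i)
    return index


def align_exact(read: str, reference: str, index: dict[str, list[int]], k: int) -> list[int]:
    """Seed with the read's first k-mer, then verify the full read at each candidate position."""
    if len(read) < k:
        raise ValueError("read must be at least k bases long")
    hits = []
    for pos in index.get(read[:k], []):
        if reference[pos:pos + len(read)] == read:
            hits.append(pos)
    return hits
```

With the reference "GATTACAGGCTTCAGATTACA" and k = 4, the read "TTCAGA" lands at position 10, but "GATTACA" matches at both 0 and 14. That second case is the multi-mapping problem: a read from a repeated sequence has no single “right” place to go.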

Exome sequencing: A lot of times, groups will choose to sequence just the “exome”, that is, all of a person’s exons. The bet is that this is where the good stuff is going to be: mutations in coding regions are definitely A Very Bad Thing, and they are also way easier to functionally analyze and decide whether or not they are causative. Plus, it’s easy. You add a sequence capture step while you’re preparing your library, so you only capture the exons.
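In silico, you can think of the capture step as a filter: keep only the fragments that overlap an exon. This sketch assumes half-open (start, end) genome coordinates and a made-up list of exon intervals. The real capture happens chemically, with probes hybridizing to exon sequence, and some off-target fragments slip through anyway.

```python
Interval = tuple[int, int]  # half-open genome coordinates: [start, end)


def overlaps(a: Interval, b: Interval) -> bool:
    """True if two half-open intervals share at least one base."""
    return a[0] < b[1] and b[0] < a[1]


def capture(fragments: list[Interval], exons: list[Interval]) -> list[Interval]:
    """Keep only fragments that overlap at least one exon (a stand-in for hybridization capture).

    A linear scan is fine for a toy; real tools use sorted or tree-based interval lookups.
    """
    return [f for f in fragments if any(overlaps(f, e) for e in exons)]
```

With exons at (100, 200) and (500, 650), the fragments (150, 450), (600, 900), and (190, 510) are kept, while (0, 90) and (300, 400) fall entirely outside the exons and are dropped.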

Why exome and not whole genome? The main reason is $$$$$. Exons make up about 50 Mb of the genome, as opposed to the full 3 Gb. (Mb = megabase, Gb = gigabase: that’s 50 million base pairs compared with 3 billion base pairs.) It’s about $3k to sequence all the exons of a person with Illumina, and about $10k to sequence everything. Or something like that. The prices are going down every day, though. Plus, the most useful information is coming from your coding regions anyway. A lot of times, when people do whole genome sequencing, they filter out the noncoding regions. It’s a lot of data, and it’s easier to tackle the parts that you know are important. If you can’t find anything there, then it’s time to look in noncoding regions. But because we know so little about the genome, more information is not always better.
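Some back-of-the-envelope math on why the exome is so much cheaper, using the Lander-Waterman relation (coverage = number of reads × read length / target size) with the 50 Mb and 3 Gb figures above and the 76-base reads from the Illumina section. The 30x target depth is an assumption for illustration, and this ignores capture efficiency, duplicate reads, and uneven coverage.

```python
import math

EXOME_BP = 50e6   # ~50 Mb of exons, the figure quoted above
GENOME_BP = 3e9   # ~3 Gb for the whole genome
READ_LEN = 76     # read length from the Illumina run described above
COVERAGE = 30     # assumption: 30x mean depth, chosen for illustration


def reads_needed(target_bp: float, coverage: float = COVERAGE, read_len: int = READ_LEN) -> int:
    """Lander-Waterman style estimate: reads = coverage * target size / read length."""
    return math.ceil(coverage * target_bp / read_len)


exome_fraction = EXOME_BP / GENOME_BP
print(f"exome is {exome_fraction:.1%} of the genome")
print(f"reads for the exome:  {reads_needed(EXOME_BP):,}")
print(f"reads for the genome: {reads_needed(GENOME_BP):,}")
```

With these numbers the exome is 1/60 of the genome (about 1.7%), so you need roughly 20 million reads for the exome versus roughly 1.2 billion for the whole genome at the same depth.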

Pros: Next gen sequencing is the crux of "discovery" science, that is, looking for something in the genome when you have no idea where it is, or even whether it's there. It generates massive amounts of data, which brings me to...

Cons: The sheer amount of data that you get means that it takes a huge team and huge computing power to sort through it all, and there are still large amounts of unaligned sequence that no one can make heads or tails of. And for all you know, the piece that you're looking for is in that little nugget on your hard drive that you don't know what to do with. And it's expensive.

Caveats

The reference sequence: The “one” reference sequence that people generally use (the one used by the UCSC browser, for example) comes mostly from one anonymous guy in, like, Buffalo, NY. See any issues with that??? There are an insane number of issues with that. When we use this reference, we are taking a huge, huge chance that this is just the normal framework of the human genome. This is most likely not the case. There are now a ton of databases purely dedicated to documenting all the variation that has been reported in the human genome. dbSNP, the 1000 Genomes Project, HapMap: these are huge collaborative projects whose whole purpose is to work with and around the fact that people are so different that there’s no way we can have just one reference sequence to align to.

Speaking of aligning, there are two words that people use to describe what they do with the short little sequences from the sequencing machine. They usually say that the next step is aligning to the reference or assembling. A lot of the time people use the two interchangeably, so much so that it’s pretty much accepted, but they aren’t the same; there’s a subtle difference. Aligning your sequence to a reference means just that: you take a piece of your sequence, look at the reference sequence to see where that piece goes, and put it there. When you assemble your own sequence, you put the pieces of your sequence together based on the parts of those sequences that overlap each other. That’s how the first “shotgun” genome sequences were put together.

That concludes this super brief (well, I tried to make it super brief; I’ll give myself a C for effort) background on sequencing. Next, I’ll look at what people are doing with these technologies. Stay tuned for stuff on the 1000 Genomes Project and HapMap.

References: I got most of this from my head, and from some PowerPoint slides left over from classes I took in college. Unfortunately, my prof cited a postdoc, who didn’t cite anything, so the Google images that I’ve been basing my Paint images on were found here

Thursday, June 9, 2011

Not a blog about autism

I swear, this isn't a blog all about autism, but a recent trio of papers published in Neuron has made the news (LA Times). They're looking at de novo copy number changes (the complete opposite of the last paper I read), and one also did some cool pathway analysis. I might look into them a little more later, but for now, off to do some lab work. I purified a bunch of vectors via midi-preps yesterday (~600 µg), and I've gotta go send them off for lentiviral packaging.

See ya later!

Wednesday, June 8, 2011

Journal Club: Exome sequencing in sporadic autism spectrum disorders identifies severe de novo mutations

Hey y’all! Welcome to my first ever ever post on this new blog. I’m starting out with a journal club feature--a close reading of an interesting or recently published paper in a field I hopefully know a little about.

Today I’ll be reading and writing and working through this paper. PMID: 21572417

First off, a little bit of background. Autism, aka ASD or Autism Spectrum Disorder, is an extremely complicated disorder, diverse in phenotype, and its genetic etiology is largely unknown. There aren’t even really many candidate genes that people can agree on yet. We’ll touch more on that later as we get through this paper. This paper specifically states that “ASDs are characterized by pervasive impairment in language, communication and social reciprocity and restricted interests or stereotyped behaviors.” There have been some GWAS hits for autism, and a few rare variants of large effect have also been described, but for the most part the genetic basis for the vast majority of autism cases remains unknown. And the stuff that is known is a long way away from being useful information for the general public (read: a clinical setting). And “long way” is an understatement. It’s a really, really long way away.

Hypothesis

What O’Roak et al. hypothesize is this: that sporadic cases of ASD, that is, families with only one child with autism and no family history, where the autism seemingly came out of genetic nowhere, are more likely to result from a de novo, or new, mutation (not inherited from either parent), whereas cases in families with multiple affected individuals should more likely result from inherited variants.

Now I’ma let you finish, but hold on a minute. There is a huge, huge assumption they are making here, which is that cases that seem sporadic actually are sporadic. They may not be. Families that have a first child with autism are a lot less likely to have more kids. And if they have two kids who are born with autism, they are really, really less likely to have more kids after that. (Like those scientific terms I used there?) So discovering which cases are truly familial is really hard. The fact that this hypothesis only focuses on the rare variant model of disease etiology is another shortcoming, but you can’t really fault this paper for that...yet. You can only test one hypothesis at a time, after all. What’s bad is when you limit yourself to only that hypothesis in the results and discussion. But we haven’t gotten there yet. </end Kanye interruption>

Methods

20 Autism trios: a trio is generally two parents and an affected kid. Their clinical evaluations can be found in the supplementary information. Here’s another source of bias. ASD is such a diverse disorder, hence the word “spectrum” in its name. As stringent and uniform as the diagnostic manual tries to be, there is still a lot of wiggle room that depends heavily on the clinician. I know a clinician who pretty much diagnoses anyone who’s the least bit weird as BAP, or Broad Autism Phenotype. If one clinician were to evaluate the parents as having BAP and the kid as having ASD, that’s like two weird parents having a super weird kid, and that could severely affect the interpretation of the data. Because then you’re not looking for a rare de novo mutation. Then you’re looking for maybe two subtle-effect variants, perhaps common ones, that together compound into a stronger phenotype in the kid. Just, you know, a pitfall of limiting yourself to the rare variant model. Agh, sorry, back to the methods.

20 Autism trios.

They did array CGH (aCGH) on all of them and found no large CNVs, except in one trio: a maternally inherited deletion. Remember, they used trios, so they have data on the mom, the dad, and the kid, which is important for discovering where a variant came from. Running aCGH is good because there aren't that many great algorithms (yet) to call copy number variations from exome sequencing results (read depth, etc.). Some groups are working on it, but I don't think it's quite there yet.
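The read-depth idea for calling CNVs from exome data, in its most basic form: count reads per exon in the sample and in a reference, normalize each by its total read count, and look at the log2 ratio. Losing one of two copies should give about log2(1/2) = -1, and gaining one should give about log2(3/2), roughly 0.58. This is a sketch that assumes you already have per-exon read counts; the cutoffs are illustrative, and real exome CNV callers also have to correct for things like capture efficiency and GC content and combine evidence across neighboring exons, which is a big part of why it’s hard.

```python
import math


def log2_depth_ratios(sample: list[int], reference: list[int]) -> list[float]:
    """Per-exon log2(sample / reference) read depth, after normalizing each by its total reads."""
    s_total, r_total = sum(sample), sum(reference)
    ratios = []
    for s, r in zip(sample, reference):
        if r == 0:
            ratios.append(math.nan)   # no reference coverage: can't say anything
        elif s == 0:
            ratios.append(-math.inf)  # no reads at all in the sample
        else:
            ratios.append(math.log2((s / s_total) / (r / r_total)))
    return ratios


def call_copy_number(ratios: list[float], del_cutoff: float = -0.7,
                     dup_cutoff: float = 0.45) -> list[str]:
    """Label each exon; the cutoffs sit between 0 and the expected -1 / +0.58 and are not tuned."""
    calls = []
    for x in ratios:
        if math.isnan(x):
            calls.append("no data")
        elif x < del_cutoff:
            calls.append("deletion")
        elif x > dup_cutoff:
            calls.append("duplication")
        else:
            calls.append("normal")
    return calls
```

For per-exon counts of [100, 100, 50, 150] in the kid against [100, 100, 100, 100] in the reference, the third exon comes out at exactly -1 (a likely one-copy deletion) and the fourth at about 0.58 (a likely one-copy gain).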

Then they did exome sequencing, that is, sequencing all the exons. (Because that’s the most likely place for a rare protein-altering variant to be, right?)

Filtering methods: They threw out all variants previously observed in dbSNP, the 1000 Genomes Project, and other exome sequencing data they had. They identified fewer than 5 de novo candidates per trio and validated them using Sanger sequencing.
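The filtering logic, sketched for one trio: a kid’s variant is a de novo candidate if it isn’t already known (dbSNP, 1000 Genomes, and their other exomes are lumped into one known set here) and neither parent carries it. The sketch adds one hypothetical extra requirement that isn’t from the paper: both parents need decent read depth at that site, because “not seen in the parent” only means something if the parent was actually sequenced well there. The variant key format and the depth threshold are assumptions; whatever survives would then go off for Sanger validation.

```python
Variant = tuple[str, int, str, str]  # (chromosome, position, ref allele, alt allele)


def de_novo_candidates(
    child: set[Variant],
    mother: set[Variant],
    father: set[Variant],
    known: set[Variant],
    parent_depth: dict[Variant, tuple[int, int]],
    min_depth: int = 10,
) -> set[Variant]:
    """Child variants absent from both parents and from known-variant databases.

    parent_depth maps a site to (mother's read depth, father's read depth);
    the depth check is a hypothetical extra filter, not from the paper.
    """
    candidates = set()
    for v in child:
        if v in known:
            continue  # previously observed (dbSNP, 1000 Genomes, other exomes)
        if v in mother or v in father:
            continue  # inherited, so not de novo
        mom_dp, dad_dp = parent_depth.get(v, (0, 0))
        if mom_dp >= min_depth and dad_dp >= min_depth:
            candidates.add(v)  # parents well covered here and neither carries it
    return candidates
```

In a toy trio where the kid has four variants, one inherited from mom, one already in dbSNP, one with good parental coverage, and one where mom’s depth is only 3, just the well-covered novel variant comes back as a candidate.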

Results

1) The overall protein-coding de novo rate per trio was higher than expected.

2) Using two independent quantitative measures, the Grantham matrix score for the nature of the amino-acid replacement and Genomic Evolutionary Rate Profiling (GERP) for the degree of nucleotide-level evolutionary conservation, they concluded that the de novo mutations they found were subject to stronger selection and are likely to have functional impact.

3) 4 out of the 20 trios had “disruptive de novo mutations that are potentially causative, including genes previously associated with autism, intellectual disability and epilepsy.”

4) These genes lead to ASD presentation. (They go into more depth in the paper; I’m basically pulling out the last sentence of each of their paragraphs.)

a) “Our data suggest that de novo mutations in GRIN2B may also lead to an ASD presentation.”

b) “SCN1A was previously associated with epilepsy and has been suggested as an ASDs candidate gene.”

c) “Additional study is warranted, as laminins have structural similarities to the neurexin and contactin-associated families of proteins, both of which have been associated with ASDs.”

d) FOXP1 encodes a member of the forkhead-box family of transcription factors and is closely related to FOXP2, a gene implicated in rare monogenic forms of speech and language disorder.
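For reference, here are the two scores from result 2 written out. Grantham’s distance between amino acids i and j combines differences in side-chain composition c, polarity p, and molecular volume v, weighted by the constants α, β, γ from Grantham’s original paper; a bigger number means a more radical substitution. GERP scores each position by its “rejected substitutions” (RS): how many substitutions you’d expect there under neutral evolution minus how many you actually observe across the aligned species, so a higher RS means a more conserved position.

$$
D_{ij} = \sqrt{\alpha\,(c_i - c_j)^2 + \beta\,(p_i - p_j)^2 + \gamma\,(v_i - v_j)^2}
$$

$$
\mathrm{RS} = N_{\text{expected}} - N_{\text{observed}}
$$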

Discussion

There wasn’t overwhelming evidence showing an excessive burden of mutations in ASD candidate genes. The people in whom they found potentially causative de novo mutations were all among the most severe cases. That is, most had pretty severe intellectual disability and features of epilepsy. And, more importantly, the genes that they did identify with de novo mutations had also been “disrupted in children with intellectual disability without ASD,” which, they acknowledge, “provides further evidence that these genetic pathways may lead to a spectrum of neurodevelopmental outcomes depending on the genetic and environmental context.”

Way to cover your butts, y’all. It might be autism but it also might not.

Here are my thoughts.

First of all, this paper cannot prove causality. Just because someone has a predicted deleterious allele does not mean that it is the cause of the disease they have. It could be a silent mutation. They could have one good copy, and one good copy might be enough to carry them through with no bad effects. Their conclusion that these genes lead to a presentation of autism is based purely on candidate genes and what’s been seen before. The conclusion that de novo mutations may contribute substantially to the genetic etiology of ASD...doesn’t really hold up here, because, well, they haven’t proved that these are the causal mutations.

We know that exome sequencing works for finding new mutations. It’s brought us a long way. This same group found the genetic cause of a Mendelian disease by sequencing the exomes of only 4 people. Exome sequencing works for Mendelian diseases, plain and simple. So it would be safe to say that exome sequencing would also work for cases of autism that act like mini-Mendelian disorders, that is, an ASD phenotype, but a little more severe, that happens to be caused by a single protein-altering mutation.

However, exome sequencing, and this study in general (again, in my opinion), does not add any new information to the genetic etiology of autism. Correct me if I’m wrong. Did you see a new pathway illuminated? Did you see a really large sample size and a really small p-value? Yeah, there were some pretty-looking genes that coded for, like, sodium channels, and things that look like neuronal proteins, but there simply weren’t enough samples, and not enough molecular follow-up, to claim what they claimed from the start.

In my opinion, and this is truly my own opinion, and sometimes I have opinions on things I’m not professionally trained in but instead on things I’ve read about on the internet, this paper got published because people are so hungry for any inkling as to what causes autism. And their methods, well, are really inoffensive. Sequencing has worked in the past, and it will work again. It will find variants that are rare and do have severe phenotypic effects. However, it’s not going to help that much with disorders like autism. Or rather, it will help in the cases of autism that act like Mendelian diseases. But explaining a large fraction of the cases that occur, including a lot of the less severe ones? We’re still waiting for that one.
